convergence_tools Namespace Reference

Functions | |

| def | next_pow_two (n) |

| def | autocorr_func_1d |

| def | auto_window (taus, c) |

| def | ensemble_autocorr |

| def | mean_ess |

| def | read_elklhd (lname, thin, warmup_frac) |

| def | read_echain |

| def | load_erun |

| def | join_echains (echains) |

| def | mean_echain (echain) |

| def | var_echain (echain) |

| def | ranknormalized_chains (chains) |

| def | fold_chains (chains) |

| def | bulk_split_rhat (chains) |

| def | split_rhat (chains) |

| def | _rhat (chains) |

| def | find_eautocorr (echain) |

| def | plot_rank_hist |

| def | save_ensemble_diagnostics |

Detailed Description

Some guidlines for the functions here This contains the functions we are currently using for the convergence tools in Themis. Since we are using ensemble samplers to find the autocorrelation time (ACT) I typically use the integrated ACT (IACT) were the ACT is calculated for each chain and then averaged. The interesting questions then becomes for the ESS which is usually the number of steps divided by the IACT. This is important because the monte carlo error for the chain estimates are functions of the ESS NOT THE NUMBER OF STEPS. Futhermore, finding the total ESS across all chains is not simple. This is because the walkers are all correlated so you can't really just add them. This is something I need to look further into Additionally in the future if you use multiple INDEPENDENT RUNS, then you should compute the Rhat statistic between the runs. If the Rhat is > 1.05 then the chains are not converged and you should restart the runs and run them at least a factor of 2 longer.

Function Documentation

|

private |

Utility function Computes the rhat between the chains. This only assumes that we are using a single chain. If using an ensemble method please first convert it into a single chain by taking the expectation of a function, i.e. a mean, across the walkers.

Here is the caller graph for this function:

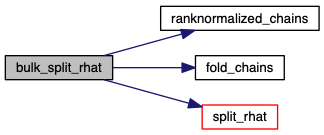

| def convergence_tools.bulk_split_rhat | ( | chains | ) |

Computes the bulk rhat using rank normalized chains. The returned value is the

maximum of the folded rhat and regular rank normalized rhat as recommended in Vehtari 2019.

Parameters:

- `chains` is an single walker chains, with indexing runs,steps,params

Returns:

- split rhat statistic from Gelman 2013 for each parameter in the chain using rank-normalized values.

Here is the call graph for this function:

Here is the caller graph for this function:

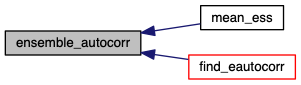

| def convergence_tools.ensemble_autocorr | ( | param_chain, | |

c = 5.0 |

|||

| ) |

Computes the integrated autocorrelation time for the chain, using emcee's formula. `param_chain` is the chain for a parameter over n walkers, so the `param_chain` shape is walkers,steps

Here is the caller graph for this function:

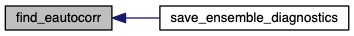

| def convergence_tools.find_eautocorr | ( | echain | ) |

Finds and returns the IACT for each parameter in the ensemble chain.

Here is the call graph for this function:

Here is the caller graph for this function:

| def convergence_tools.fold_chains | ( | chains | ) |

Returns the chain folded around the median of all the chains. This helps with measuring rhat in the tails

Here is the caller graph for this function:

| def convergence_tools.join_echains | ( | echains | ) |

Join a list of ensemble chains from separate runs. If chains have a different number of steps then the largest even common size is used to join them.

| def convergence_tools.load_erun | ( | cname, | |

| lname, | |||

| thin, | |||

plist = [], |

|||

warmup_frac = 0.5 |

|||

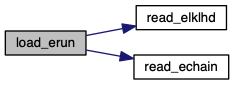

| ) |

Loads the ensemble chain and likelihood files

Params:

- cname<str> Is the name of the chains file

- lname<str> Is the name of the lklhd file

- thin <int> Is the thinning of the lkhd and chain

- plist<arr<int>> Is the list of parameters to read in, e.g. [1,2,5] will read in the first second and 5 parameter from the chain file.

defaults to [] which reads in all the parameters.

- warmup_frac Is the fraction of the chain to remove due to warmup. The default is 0.5 so the first 50% of the chain will be removed.

Returns:

chain, lklhd

Where the chain is a 3d array using the walker,steps,param indexing.

Here is the call graph for this function:

| def convergence_tools.mean_echain | ( | echain | ) |

Computes the average of the ensemble chain for each step. That is, we average over the walkers at each step converting the chain into a single particle chain of the average.

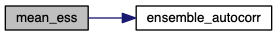

| def convergence_tools.mean_ess | ( | y, | |

c = 5.0 |

|||

| ) |

Calculates the average ESS across chains by dividing the number of steps by the IAC. Note the actual ESS of the entire chain and walkers is > than this, but likely isn't the number of walkers * mean_ess since the chains are correlated.

Here is the call graph for this function:

| def convergence_tools.plot_rank_hist | ( | chains, | |

bins = 30, |

|||

names = None |

|||

| ) |

Computes the rank plots, where the rank is taken over all the runs. The histograms should be roughly uniform. Any structure in them signals that the chains aren't converged or mixing well, i.e. your Monte Carlo estimates will be biased. Chains must be the chains for a specific parameter over multiple runs with indexing run,steps

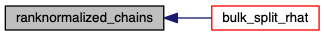

| def convergence_tools.ranknormalized_chains | ( | chains | ) |

Computes the rank-normalized chains, where the rank is taken over all the chains. This allows rhat to be used when we the distribution might not have a finite mean or variance. Returns the rank normalized chains, i.e. the z-scores and the actual ranks.

Here is the caller graph for this function:

| def convergence_tools.read_echain | ( | cname, | |

| nwalkers, | |||

| nskip, | |||

| thin, | |||

plist = [] |

|||

| ) |

Reads in a Themis chain from the ensemble methods. Arguments: - `cname` : string of the file containing the chain. - `nwalkers` : number of walkers used in the ensemble sampler - `nskip` : number of MCMC steps to skip, i.e. warmup period. - `thin` : controls how much we want to thin the chain, so every thin step will be stored. Keyword arguments: - `plist` : list of integers of parameters to read in. Default is [] which reads in every parameter Returns: chain file with dimensions step,walker,param indexing.

Here is the caller graph for this function:

| def convergence_tools.read_elklhd | ( | lname, | |

| thin, | |||

| warmup_frac | |||

| ) |

Reads in a themis likelihood file, `lname` storing only the 1-`warmup_frac` part for every `thin` steps. Returns the lklhd file in an 2D array with dimensions stepsXwalkers, the number of steps that were skipped and the number of COMPLETED steps.

Here is the caller graph for this function:

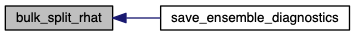

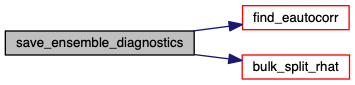

| def convergence_tools.save_ensemble_diagnostics | ( | out, | |

| chains, | |||

| chainsname, | |||

method = "rank", |

|||

nthin = 1 |

|||

| ) |

Outputs the diagnostics of the multiple chains in `chains` to the out file, where the chain is kept track of in `chainsname`. Currently it only outputs the chains integrated autocorrelation time for each parameter. Eventually other diagnostics will be included, such as the Gelman-Rubin diagnostic, i.e. split-rhat, and ESS. Currently the default method for rhat is the rank method from Vehtari 2019. Note that if IACT is "significantly different" between chains, then at least one of the chains isn't converged!. nthin is included the multiple the IACT of the `chain` passed.

Here is the call graph for this function:

| def convergence_tools.split_rhat | ( | chains | ) |

Computes the split rhat statistic from Gelman 2013. This is able to deal with distributions that don't have finite mean and/or variance. Additionally it splits the chain in two in order to check if the beginning and end of the chain have similar variance to check for intrachain convergence.

Parameters:

- `chains` is an single walker chains, with indexing runs,steps,params

Returns:

- split rhat statistic from Gelman 2013 for each parameter in the chain.

Here is the call graph for this function:

Here is the caller graph for this function:

| def convergence_tools.var_echain | ( | echain | ) |

Computes the variance of the echain for each step. That is, we compute the variance of the walkers at each step, converting the chain into a single particle chain of the variance between chains.